Navigating AI Testing: Common Pitfalls and Solutions

Written on

Chapter 1: Understanding AI and Machine Learning

When engaging with machine learning (ML) and artificial intelligence (AI), it’s beneficial to adopt a teaching mindset. Essentially, the goal of ML/AI is to enable your algorithm to learn tasks through examples rather than precise instructions.

As any educator will tell you, the quality of your examples is crucial. The complexity of the task dictates the number of examples required. For reliable results, the testing phase is equally vital.

Given the importance of testing in ML/AI, it’s unfortunate that it can often be challenging. Let’s examine two common testing errors: one that beginners frequently make and another that even seasoned practitioners can fall victim to.

Section 1.1: Beginner Mistakes in AI Testing

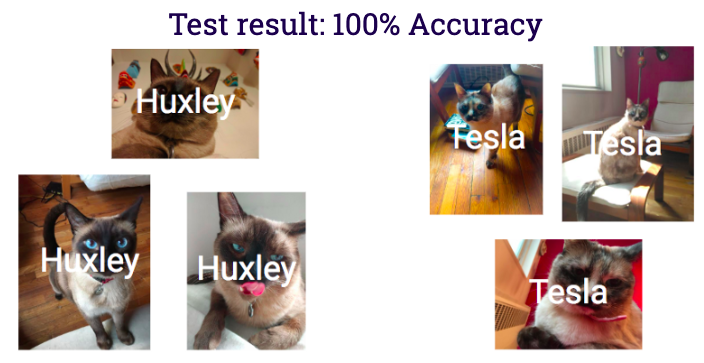

Consider a scenario where I’m developing an AI system to identify images of bananas and my two cats, Huxley and Tesla.

Building an image recognition model demands thousands of images—each of the seven samples serves as a placeholder for 10,000 similar photos. After inputting 70,000 images into the AI algorithm, we observe satisfactory training results. But does the model truly perform well?

The real test begins now!

Have you noticed the issue with our choice of 60,000 test images? They look suspiciously familiar. By ignoring our doubts, we submit them without labels to determine if our model can accurately classify them. Surprisingly, it achieves 100% accuracy!

However, just because there are no apparent mistakes doesn’t mean the system is trustworthy. To validate its reliability, we need to assess its performance with new data, not recycled examples. This scenario demonstrates a significant oversight—reusing training data led to a misleadingly high accuracy.

This situation parallels testing human students with questions they've already encountered. Without a fresh set of problems, we can’t gauge their understanding or ability to generalize—this distinction is crucial for effective machine learning.

The challenge with memorization lies in its inability to predict future scenarios. Just as it’s foolish to test humans on familiar material, it’s even worse for machines. Given the intricate nature of ML and AI, detecting overfitting and memorization requires an untouched dataset.

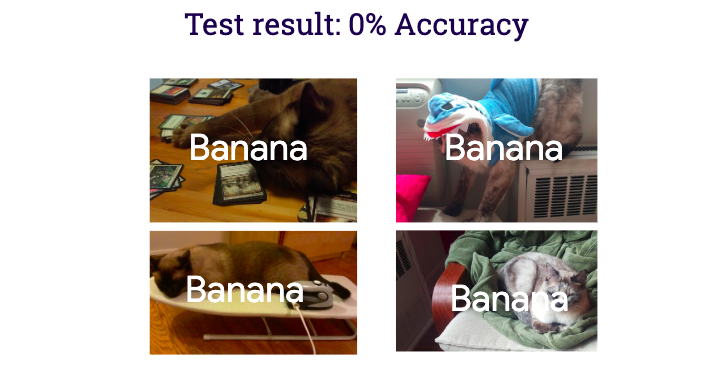

When developing ML solutions, your goal should be to create an accurate future simulator. Thus, a more effective testing set would consist entirely of examples that the system hasn’t previously encountered. Let’s consider testing with 40,000 new images!

How did it perform?

In theory, it would be quite unusual for the test labels to all yield the same result, especially since that category is the minority. In cases of unbalanced datasets, failures are more likely to arise from majority or random labels. Yet, it turns out our system is performing poorly. Because we used new data, we could accurately assess its performance.

Section 1.2: Identifying Missteps in AI Testing

Achieving a 0% accuracy score is indeed strange. A dataset of 70,000 high-quality images should not lead to such dismal results. Typically, a score of 0% signifies a significant error, likely stemming from using a test dataset that doesn’t match the training data in format or context.

While I’m not suggesting you need to know how to manage such disastrous results, understanding the importance of achieving a score above zero is crucial. The goal is to surpass a predetermined benchmark, ideally exceeding random guessing.

To avoid novice mistakes, always ensure that your tests are conducted on data that is untouched and has never been accessed by the ML/AI system or its developers. This practice helps you dodge overfitting, akin to a student who memorizes their exam answers.

To achieve this, it’s essential to have pristine data available. One way to secure this is by committing to future data collection—a task that may prove challenging. If you’re fortunate enough to have a steady influx of new data, recognize that not everyone shares this advantage.

If collecting data isn’t feasible, make it a priority to address testing concerns at the outset of each project. By partitioning your data and keeping some locked away until the final evaluation, you can avoid the pitfalls that many teams have encountered by testing on training data.

The Most Effective Practice in Data Science

A straightforward method to distinguish useful patterns from distractions is imperative for successful data science endeavors.

Now that we’ve tackled the beginner mistake, we’ll shift our focus to the expert mistake in the next section.

Chapter 2: The Expert's Misstep

In the first video, "Why AI Can't Pass This Test," we explore the limitations of AI systems in various testing scenarios, shedding light on common pitfalls in AI evaluation.

The second video, "No B.S Guide to AI In Test Automation," provides a straightforward approach to implementing AI in testing automation, focusing on practical solutions for common challenges.

Thanks for reading! Interested in further learning?

If you enjoyed this content and seek a fun, applied AI course suitable for all levels, consider checking out the course I’ve developed.

Let’s connect! You can find me on Twitter, YouTube, Substack, and LinkedIn. If you’re interested in having me speak at your event, please reach out using this form.