Efficient Incremental Training of Large Datasets Using Dask

Chapter 1: Introduction to Incremental Learning

As datasets grow, machine learning workflows increasingly run into a basic constraint: the data no longer fits in memory. Traditional in-memory processing techniques fall short once a dataset exceeds available RAM. Incremental training addresses this by processing the data in smaller, manageable portions rather than loading the entire dataset at once.

Dask, a parallel computing library for Python, provides a well-suited framework for implementing incremental training. Because it operates on partitioned data and evaluates lazily, it lets data scientists train machine learning models on datasets that exceed available memory.

The wisdom of dividing and conquering large data challenges lies in taking small, incremental steps.

The Challenge with Large Datasets

Dealing with large datasets can be daunting due to the limits of physical memory and computational power. Standard data processing libraries like Pandas and NumPy are built for in-memory operations, which become impractical when data exceeds available system memory. Scalable tools that can work on data piece by piece are therefore needed.

Introduction to Dask

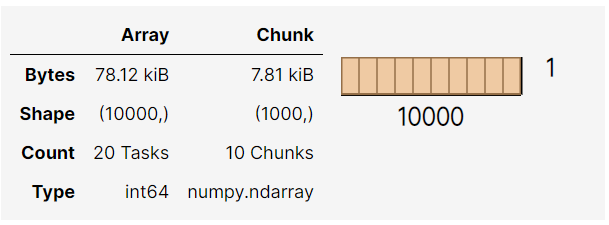

Dask offers a flexible and scalable approach to parallelizing existing Python tools and workflows. Unlike traditional methods requiring full dataset loading, Dask processes data in smaller, partitioned blocks. This capability enables operations on datasets that surpass the memory capacity of the machine. Furthermore, Dask integrates seamlessly with popular Python libraries, including Pandas, NumPy, and Scikit-learn, providing a familiar environment enhanced by scalability.

Incremental Training with Dask

Incremental training entails sequentially training a model on small portions of data, so the model is updated with each chunk. This method is particularly beneficial for large datasets and online learning scenarios. Dask-ML simplifies incremental training through its Incremental wrapper, which applies an estimator's partial_fit method to each block of a Dask collection in turn. It therefore works with any scikit-learn estimator that implements partial_fit, such as models trained with stochastic gradient descent (SGD).
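Conceptually, training block by block resembles a plain scikit-learn partial_fit loop. The sketch below uses only NumPy and scikit-learn to show that pattern; it is not the wrapper's actual implementation, and the chunk size and dataset dimensions are arbitrary assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import SGDClassifier

# Synthetic data standing in for a dataset that arrives in pieces.
X, y = make_classification(n_samples=10_000, n_features=20, random_state=0)

# partial_fit must be told every class label up front, because a single
# chunk is not guaranteed to contain all of them.
classes = np.unique(y)

clf = SGDClassifier(random_state=0)

chunk_size = 1_000  # illustrative choice
n_chunks = 0
for start in range(0, len(X), chunk_size):
    stop = start + chunk_size
    # Each call updates the existing coefficients instead of refitting from scratch.
    clf.partial_fit(X[start:stop], y[start:stop], classes=classes)
    n_chunks += 1

train_accuracy = clf.score(X, y)
print(f"chunks seen: {n_chunks}, training accuracy: {train_accuracy:.3f}")
```

The loop makes a single pass over the data, which is also why the model only ever needs one chunk in memory at a time.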

Implementation and Workflow

The workflow begins by loading the dataset into a Dask DataFrame, which partitions the data into manageable segments. Data preprocessing and transformations can then be executed in parallel across these segments. The Incremental wrapper then trains a model, such as SGDClassifier, on the partitions one after another. Because training touches one partition at a time, peak memory usage stays far below what loading the entire dataset would require.

Advantages of Incremental Training in Dask

The benefits of incremental training with Dask are substantial. It enables the processing of datasets too large for memory, bounds the work done at each step by handling data in chunks, and supports online learning where models must continuously adapt to new inputs. Additionally, Dask's parallelism can speed up the surrounding steps, such as preprocessing and prediction, although the partial_fit calls themselves run sequentially.
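A bit of arithmetic shows why chunking matters for memory. The sketch below describes a large array lazily and compares its total size with the size of one chunk; the array dimensions and chunk shape are assumptions picked for round numbers, and no data is actually allocated.

```python
import dask.array as da

# Lazily describe a 10,000,000 x 20 float64 array split into row blocks.
# This only builds metadata and a task graph; nothing is computed.
x = da.zeros((10_000_000, 20), chunks=(1_000_000, 20), dtype="float64")

# Size of the whole array if it were materialized at once.
total_gb = x.nbytes / 1e9

# Size of a single chunk, which is what one training step would need.
rows_per_chunk, cols_per_chunk = x.chunksize
chunk_mb = rows_per_chunk * cols_per_chunk * x.dtype.itemsize / 1e6

print(f"total: {total_gb} GB, one chunk: {chunk_mb} MB, blocks: {x.numblocks}")
```

With these numbers the full array would take 1.6 GB while each block takes 160 MB, so a process that handles one block at a time needs roughly a tenth of the memory.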

Code Example

Here is a complete Python code snippet demonstrating how to perform incremental training on a large dataset using Dask:

    import numpy as np
    import pandas as pd
    import matplotlib.pyplot as plt
    import dask.dataframe as dd
    from dask.array import from_array
    from dask_ml.model_selection import train_test_split
    from dask_ml.wrappers import Incremental
    from sklearn.datasets import make_classification
    from sklearn.linear_model import SGDClassifier
    from sklearn.metrics import accuracy_score

    # Generate a synthetic dataset
    X, y = make_classification(n_samples=100000, n_features=20, random_state=42)
    df = dd.from_pandas(pd.DataFrame(X), npartitions=10)

    # Ensure all column names are strings
    df.columns = df.columns.astype(str)

    # Convert the target to a Dask array whose chunks match the 10 partitions,
    # then add it to the DataFrame
    y_dask = from_array(y, chunks=len(y) // 10)
    df['target'] = y_dask

    # Feature engineering: add a synthetic interaction feature
    df['synthetic_feature'] = df['0'] * df['1']

    # Split dataset into training and testing sets. Drop the target from the
    # features by name so the label cannot leak into the model inputs.
    X_train, X_test, y_train, y_test = train_test_split(
        df.drop(columns='target'), df['target'], test_size=0.2, shuffle=True
    )

    # Initialize the Incremental model with SGDClassifier
    model = Incremental(SGDClassifier(max_iter=1000, tol=1e-3))

    # Fit the model incrementally; partial_fit needs every class label up front
    model.fit(X_train, y_train, classes=np.unique(y))

    # Predict and evaluate the model
    y_pred = model.predict(X_test).compute()
    y_true = y_test.compute().to_numpy()
    accuracy = accuracy_score(y_true, y_pred)
    print(f'Accuracy: {accuracy}')

    # Plot the results on a shared positional x-axis
    fig, ax = plt.subplots()
    ax.plot(y_pred, label='Predictions')
    ax.plot(y_true, label='Actual')
    ax.set_title('Actual vs. Predicted')
    ax.legend()
    plt.show()

This code illustrates incremental training with Dask end to end: it generates synthetic data, partitions it into a Dask DataFrame, adds an engineered feature, splits the data into training and test sets, trains an SGDClassifier one block at a time through the Incremental wrapper, and evaluates the predictions.

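The plot titled “Actual vs. Predicted” visually compares the true labels with the model's predictions. An accuracy of exactly 1.0 should be treated with suspicion rather than as an ideal result. By default, make_classification randomly flips a small fraction of the labels, so a perfect score on this data usually points to label leakage, such as the target column ending up among the features, rather than to overfitting alone. It is worth confirming that the feature matrix excludes the target before trusting the number.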

The first video, "Scalable Machine Learning with Dask," delves into techniques for efficiently scaling machine learning models using Dask, providing valuable insights into its practical applications.

The second video, "Scale Machine Learning Code with Dask | Dask Summit 2021," explores advanced strategies for scaling machine learning code, showcasing Dask's capabilities in handling large datasets.

Conclusion

Incremental training with Dask offers a scalable way to train models on large datasets. By processing data in partitions, it works around memory limitations and lets data scientists train on volumes of data that would not otherwise fit on a single machine. As data continues to grow in size and complexity, tools like Dask will become increasingly essential in the data science toolkit, expanding the possibilities in machine learning and predictive analytics.